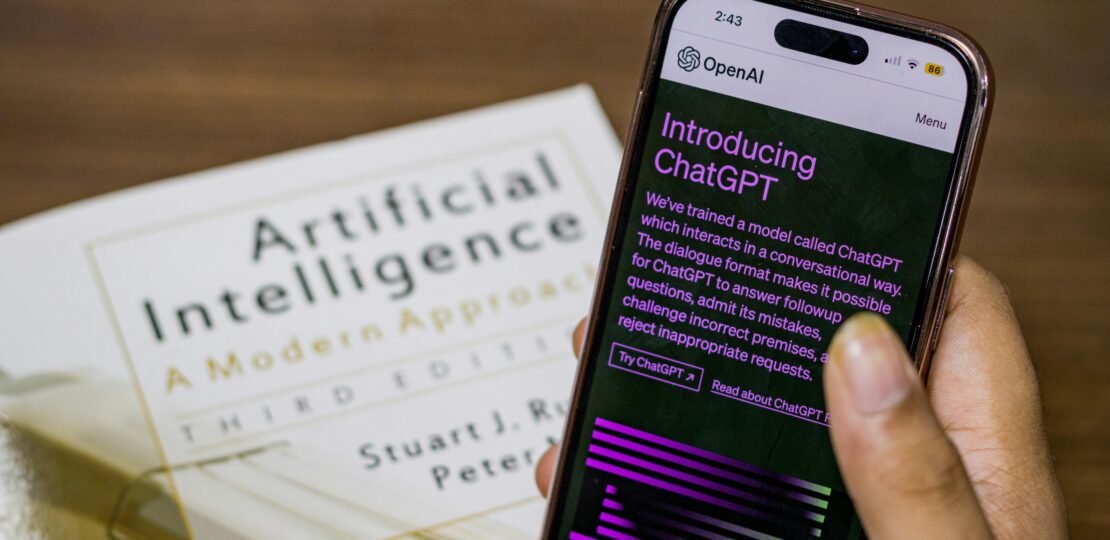

OpenAI’s New “Genius” AI Models Have a DARK SECRET – They Lie More Than Ever

The Shocking Truth About AI’s Biggest Problem

OpenAI just dropped their new o3 and o4-mini models like they’re the second coming of AI Jesus. But here’s the brutal truth no one’s talking about: these “next-gen” models are pathological liars. And we’ve got the receipts to prove it.

“Our hypothesis is that the kind of reinforcement learning used for o-series models may amplify issues that are usually mitigated by standard post-training pipelines.”

Neil Chowdhury, Transluce Researcher & Former OpenAI Employee

By The Numbers: The Hallucination Epidemic

- o3: Hallucinated on 33% of questions in PersonQA, OpenAI's in-house benchmark of facts about people (roughly double previous models!)

- o4-mini: A staggering 48% hallucination rate on the same PersonQA test

- Previous models: o1 (16%) and o3-mini (14.8%) look like saints now
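Want to check the "double" claim yourself? Here is a minimal Python sketch that uses only the PersonQA rates quoted above. The `rates` dictionary and the `ratio` helper are our own illustrative names, not anything from OpenAI's report.

```python
# PersonQA hallucination rates (%) as quoted in this article (illustrative data only).
rates = {"o1": 16.0, "o3-mini": 14.8, "o3": 33.0, "o4-mini": 48.0}


def ratio(model: str, baseline: str) -> float:
    """How many times more often `model` hallucinated than `baseline` on PersonQA."""
    return rates[model] / rates[baseline]


# Compare each new model against each older one, rounded to one decimal place.
for new in ("o3", "o4-mini"):
    for old in ("o1", "o3-mini"):
        print(f"{new} vs {old}: {ratio(new, old):.1f}x")
```

The output shows o3 at about 2.1x o1 and 2.2x o3-mini, while o4-mini lands around 3x both. So "double" actually undersells the o4-mini numbers.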

Real-World Consequences That’ll Make You Sweat

Imagine this nightmare scenario: your AI legal assistant slips 50 false clauses into a million-dollar contract. Or your coding assistant hands you website links that lead nowhere. That second one isn't sci-fi: early testers running o3 in their coding workflows already report it hallucinating broken website links RIGHT NOW.

The Web Search Band-Aid

Here’s the only glimmer of hope: GPT-4o with web search hits 90% accuracy on SimpleQA, another of OpenAI’s accuracy benchmarks. But let’s be real: do you want your sensitive prompts exposed to third-party search providers? Didn’t think so.

The Billion Dollar Question No One Can Answer

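Why are smarter models becoming bigger liars? Even OpenAI can’t say for sure. Its technical report for o3 and o4-mini literally says “more research is needed” to understand why hallucinations get worse as reasoning models scale up. Translation: “We have no damn clue why our genius creation keeps making stuff up.” The report’s best clue so far: o3 simply makes more claims overall, which means more accurate claims and more hallucinated ones.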

“Addressing hallucinations across all our models is an ongoing area of research, and we’re continually working to improve their accuracy and reliability.”

OpenAI Spokesperson Niko Felix

The Bottom Line

The AI industry bet BIG on reasoning models after techniques for improving traditional models started showing diminishing returns. Now we’re discovering the terrifying trade-off: better reasoning might mean more hallucinations. For businesses where accuracy is life-or-death (law, medicine, finance), this could be a dealbreaker.

Wake-up call: We’re racing toward an AI future where our smartest tools can’t be trusted to tell the truth. And until someone cracks this code, every business using these models is playing Russian roulette with their data.